Building AI That Doesn't Break in Production

bem is an event-driven, function-based AI platform. That's uncommon, but so is AI in critical production environments.

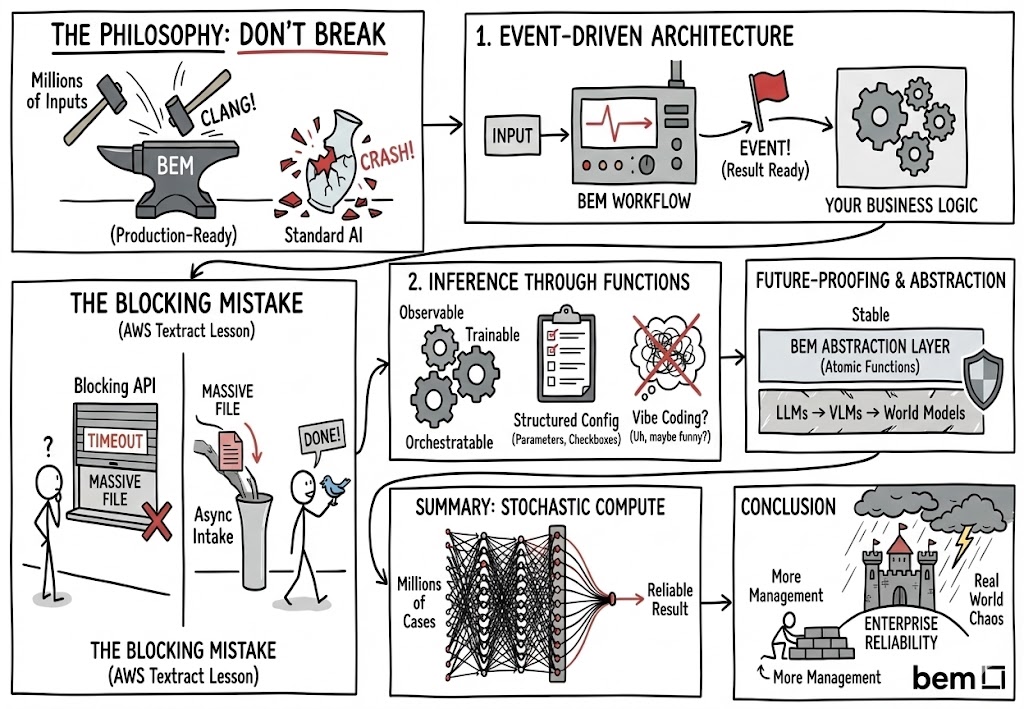

bem is an opinionated system, both in its architecture and in the interfaces users integrate with. Every decision we have made, from interface design to internal architecture, serves one specific goal: building AI that doesn't break in production.

We aim to support critical environments that handle millions of inputs a day, each of which must be processed correctly every single time. To get there, we prioritize two design decisions that distinguish bem from standard AI wrappers: Asynchronous Event Loops and Atomic Functions.

1. An Event-Driven Architecture

First and foremost, bem is an event-driven system: it is designed so that your own business logic reacts to workflow results as they arrive.

We frequently receive feature requests to make bem’s API a blocking, synchronous fetch. We don't think that makes sense for a reliable application. When we started building bem two years ago, we researched existing APIs for fuzzy, AI-driven processing. AWS Textract served as a prime example of bad design: if you send a massive file to its blocking API, the request can simply time out without a result or an explanation.

Because we process data before our users even see it, we don't always know the input size, and often, neither do the users. To ensure resiliency, we mandate an asynchronous approach.

How it works

Instead of holding a connection open and hoping it doesn't time out, the bem architecture requires two distinct steps:

- Dispatch: You trigger a Call to process your data. This is non-blocking and returns immediately, allowing you to fire thousands of inputs into the system simultaneously without degrading performance.

- React: You create a Subscription that listens for completion. When the work is done, bem pushes the result to your system via event webhooks.
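To make the two steps concrete, here is a minimal, in-process sketch of the dispatch-then-react pattern in Python. It is not bem's SDK: the `EventDrivenClient` class and its `dispatch`, `subscribe`, and `drain` methods are hypothetical, a local async callback stands in for an event webhook, and a short sleep proportional to input size stands in for real processing. What it illustrates is that every dispatch returns an ID immediately, so no caller holds a connection open while a large input is being processed.

```python
import asyncio
import uuid
from dataclasses import dataclass
from typing import Awaitable, Callable


@dataclass
class CallResult:
    call_id: str
    output: str


# A subscriber is an async callback; in a real deployment a webhook endpoint plays this role.
Handler = Callable[[CallResult], Awaitable[None]]


class EventDrivenClient:
    """Hypothetical in-process stand-in for a dispatch/subscribe API (not bem's actual SDK)."""

    def __init__(self) -> None:
        self._subscribers: list[Handler] = []
        self._in_flight: set[asyncio.Task] = set()

    def subscribe(self, handler: Handler) -> None:
        # React: register interest in completion events.
        self._subscribers.append(handler)

    def dispatch(self, payload: str) -> str:
        # Dispatch: schedule the work and return an ID right away; the caller never waits here.
        call_id = str(uuid.uuid4())
        task = asyncio.create_task(self._process(call_id, payload))
        self._in_flight.add(task)
        task.add_done_callback(self._in_flight.discard)
        return call_id

    async def _process(self, call_id: str, payload: str) -> None:
        # Simulated work whose duration grows with input size, which the caller may not know up front.
        await asyncio.sleep(len(payload) / 100_000)
        result = CallResult(call_id=call_id, output=payload.upper())
        # Push the result to every subscriber once the work is done.
        for handler in self._subscribers:
            await handler(result)

    async def drain(self) -> None:
        # Demo helper only: wait until every in-flight call has completed.
        while self._in_flight:
            await asyncio.gather(*self._in_flight)


async def main() -> tuple[list[str], list[CallResult]]:
    client = EventDrivenClient()
    received: list[CallResult] = []

    async def on_complete(result: CallResult) -> None:
        received.append(result)

    client.subscribe(on_complete)
    # Fire 100 inputs of varying size; each dispatch() call returns immediately.
    call_ids = [client.dispatch(f"document-{i} " * (i + 1)) for i in range(100)]
    await client.drain()
    return call_ids, received


if __name__ == "__main__":
    ids, results = asyncio.run(main())
    print(f"dispatched {len(ids)} calls, received {len(results)} results")
```

Because results are delivered through the subscription, they can arrive in a different order than they were dispatched (shorter inputs finish first in this sketch), which is why each result carries its own `call_id`.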

While we may eventually explore WebSockets for real-time streams, we currently see no real use case for bem to act as a fragile, synchronous fetch call.

2. Inference Through Functions

Second, bem exposes inference through Functions. These primitives are observable, trainable, and fully orchestratable.

Functions are not free-form prompts. Each one has its own configuration, which should be iterated on in a structured way rather than through unstructured "vibe coding." As the technology moves beyond LLMs, and perhaps beyond transformer models entirely, we need an API pattern that is resilient to model changes.

Configuration over Conversation

Whether the future lies in latent space inference or world models, we build layers of abstraction that keep your application safe. We are betting that future inference access will not be through turn-based chat systems, but through atomic units of functionality.

- You create Functions that act as strict contracts between your code and the probabilistic model.

- You orchestrate Workflows to chain these functions together, ensuring that your business logic remains decoupled from the underlying model infrastructure.
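The contract idea can be sketched in a few lines of Python. Everything here is hypothetical and for illustration only: `Function`, `Workflow`, `ContractViolation`, `stub_model`, and `sloppy_model` are not bem's API, and the "model" is a deterministic stand-in for a stochastic backend. The point is the shape: each Function declares the fields it must return, output that breaks the contract is rejected instead of flowing downstream, and the Workflow receives the model as a parameter so the backend can be swapped without touching the business logic.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# Any callable from (function config, input data) to a raw dict; stands in for a model backend.
Model = Callable[[dict[str, Any], dict[str, Any]], dict[str, Any]]


class ContractViolation(Exception):
    """Raised when model output does not satisfy a Function's declared schema."""


@dataclass
class Function:
    # Hypothetical Function shape: a named config plus a strict output contract.
    name: str
    config: dict[str, Any]
    output_schema: dict[str, type]  # required field name -> expected Python type

    def invoke(self, model: Model, data: dict[str, Any]) -> dict[str, Any]:
        raw = model(self.config, data)
        for key, expected in self.output_schema.items():
            if not isinstance(raw.get(key), expected):
                raise ContractViolation(f"{self.name}: {key!r} must be {expected.__name__}")
        # Only fields declared in the contract are passed downstream.
        return {key: raw[key] for key in self.output_schema}


@dataclass
class Workflow:
    steps: list[Function] = field(default_factory=list)

    def run(self, model: Model, data: dict[str, Any]) -> dict[str, Any]:
        # Chain Functions: each step sees the original input plus all earlier outputs.
        for step in self.steps:
            data = {**data, **step.invoke(model, data)}
        return data


def stub_model(config: dict[str, Any], data: dict[str, Any]) -> dict[str, Any]:
    # Deterministic stand-in for a real model; returns an extra field the contract will drop.
    if config["task"] == "classify":
        doc_type = "invoice" if "total:" in data["text"] else "other"
        return {"doc_type": doc_type, "reasoning": "mentions a total"}
    if config["task"] == "extract":
        return {"total": float(data["text"].split("total:")[1])}
    return {}


def sloppy_model(config: dict[str, Any], data: dict[str, Any]) -> dict[str, Any]:
    # A swapped-in backend that returns the total as a string, breaking the contract.
    return {"doc_type": "invoice", "total": "42.50"}


classify = Function("classify", {"task": "classify"}, {"doc_type": str})
extract_total = Function("extract_total", {"task": "extract"}, {"total": float})
pipeline = Workflow([classify, extract_total])

if __name__ == "__main__":
    print(pipeline.run(stub_model, {"text": "invoice total: 42.50"}))
    try:
        pipeline.run(sloppy_model, {"text": "invoice total: 42.50"})
    except ContractViolation as err:
        print("rejected:", err)
```

With `stub_model`, the pipeline returns the input text plus `doc_type` and `total`, and the undeclared `reasoning` field never leaves its step. Swapping in `sloppy_model` changes nothing in `pipeline` itself; the contract check turns a silent downstream error into an explicit failure at the step that produced it. A production system would more likely express contracts in a richer schema language than a field-to-type map.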

Summary: AI as Stochastic Compute

To us, AI is nothing more than an amalgamation of technologies culminating in one simple concept: stochastic compute. It is essentially a switch-case statement with millions of cases, the product of decades of machine learning research, used to express genuinely fuzzy business logic.

We believe these design decisions produce systems that are accurate, reliable, and able to integrate with enterprise resources. Some smaller companies and hobbyist developers who prefer blocking calls and chat prompts have pushed back. Even though this approach asks for more management on the user's end, we believe it results in:

- Incredibly reliable applications.

- Better user experiences.

- Greater resiliency on both the infrastructure and application layers.

As bem continues to evolve, we will continue making architecture decisions that prioritize our users' needs for reliability, accuracy, and security.

Ready to see it in action?

Talk to our team to walk through how bem can work inside your stack.